Today I read Fred Wilson’s post “Explainability”. He points out that explainability is about trust, and that it is a critical piece of the user experience of machine-learning-enabled products.

> “What I want on my phone, on my computer, in Alexa, and everywhere that machine learning touches me, is a ‘why’ button I can push (or speak) to know why I got that recommendation...This is coming. I have no doubt about it. And the companies that offer it to us will build the trust that will be critical to remaining relevant in the age of machine learning.”

Technically, it is very hard to explain the algorithms and data pipelines inside these machine learning products to the public. This differs from the context of research and development, where we need to understand every detail of machine learning and deep neural networks. In research labs, we are developing many visualization and network-based methods to understand how networks learn features and allocate weights. This article from MIT Technology Review covers much of the current research effort in the field, and this publication in Distill shows how a deep neural network visualizes an image.

In the real world, however, the math behind the algorithms usually fails to capture the public’s interest. In actual production, the complicated data infrastructure behind a machine learning product typically takes months or even years to build, and explaining the “actual system” would likely make the audience impatient and drive them away. As a result, most explanations stay at an 8,000-meter-high conceptual level, and they often distort reality for the sake of simplicity.

How a deep neural network visualizes images

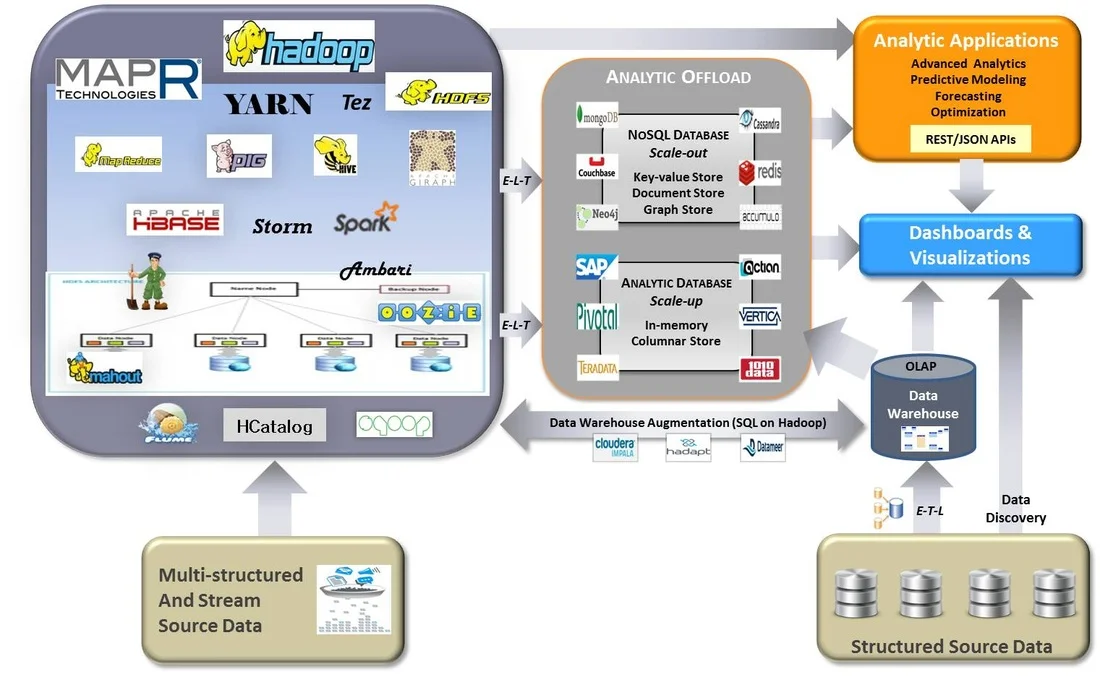

Modern Hadoop-based data infrastructure. The actual implementation can be more complicated.

Nevertheless, I do agree that trust is an important component of a product’s wide, successful adoption, and this applies not only to machine learning products. We board a plane even though we may not know how aerodynamics and engines actually work, because we trust the airline and its crew. We use Google to search the web even though we may not know how PageRank actually works, because we trust the service to give us the information we need. For companies developing machine learning products, a key factor is earning users’ trust that the products will work in their best interest and protect their privacy and security. Fortunately, companies are increasingly aware of this: at Shopify, for example, we have a team dedicated to building merchants’ trust in our products.